To Learn a New Technology: Generative AI or Human Teachers? By Ed Lyons

Image by Ed Lyons with Midjourney

I have known several developers who have learned new technologies using Generative AI. They have described it as a tremendous tool for learning required patterns, and enjoyed having a “pair programmer” next to them while they were using that technology.

But what are the tradeoffs using AI to learn and master a technology? Does it work well for everyone? When should you turn to humans for instruction instead of AI?

An Experiment

I have used both AI and traditional instruction to learn new technology in the past year, but to better understand the differences, I tried each in isolation for learning a new framework.

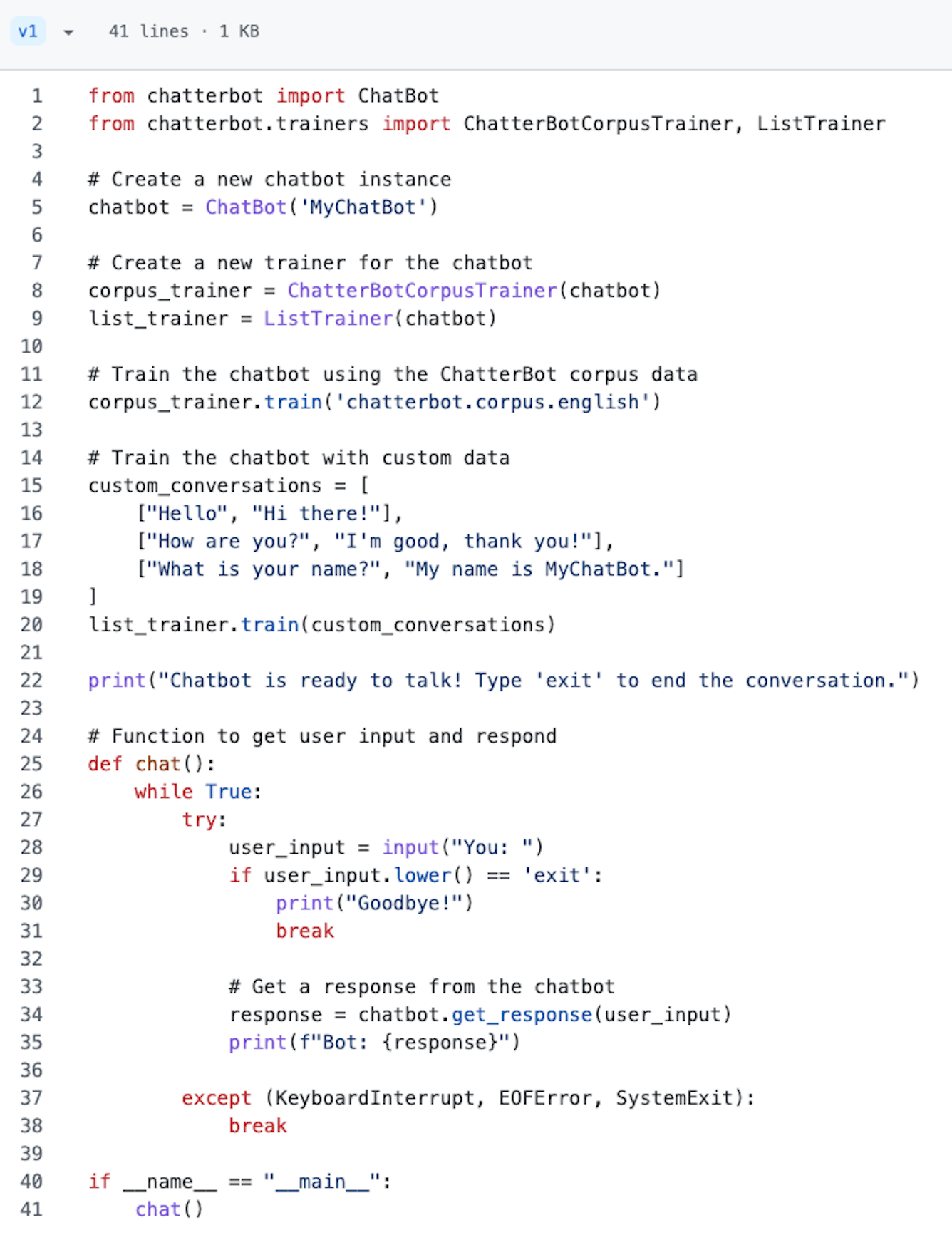

As a test project, I used Python to create a custom chatbot. I have used Python extensively, but I had never built a chatbot before. I started with an AI-first approach, using GitHub Copilot and Gemini. I prompted Copilot to create a chatbot, and then I gave it more prompts for enhancements.

Here is a screenshot of the answer to my first prompt:

And here is a look at the source it initially generated:

It didn’t take long for me to get something running, though things were not yet what I wanted. But this felt like great progress.

With the help of Copilot, I started modifying the pieces of the bot. But after a few changes, I had a sinking feeling. I wondered, “Is this the only way to do this?”

I asked Gemini what other Python chatbot frameworks were available besides the Chatterbot framework it chose. I got 14 choices in return! I then used plain Google to seek the same answer and there were even more choices, including some technologies that were not chatbots, but could be used as such. I now had doubts about Chatterbot.

I then wondered which frameworks were popular, and which one was the best. So I asked Gemini, and it said Rasa, which was one of the 14. As Gemini sometimes shows its sources, I could see that this recommendation was from… an answer in Quora? I did not like that.

I then went back to Copilot and asked the same question. It said that Rasa was the best, and Chatterbot was the second-best. Was that from Quora, too? I asked why these choices were the best, and it gave a long answer that did not impress me. I thought, “But if Rasa was number one, why did you give me the second choice in the generated code?” I inferred from the long answer that Chatterbot was simpler to use, which could explain why it was picked. Still, I would have liked to have been told this up front. Of course, you can ask the AI to use a specific framework.

I then made more changes, and got my little application working for a few simple chats. It was great that whatever I wanted to do, the AI gave me what I needed, unlike searching online, where I often only find something related. I then decided I had done enough for my test. Despite my doubts, it still felt like a win.

Then, I started my learning over with a more traditional approach, googling for framework choices, and then reading and watching tutorials. I kept Chatterbot for the sake of this test. I wrote the code by hand without “cheating” by looking at what Copilot had done. This was mostly copying or retyping code samples from the Chatterbot website and tutorials.

It was a very different learning experience. It was certainly more of a struggle, and it took far longer to write code using notes I had from the tutorials. Yet I felt like I understood the framework far better after all of the research. Also, I trusted the authors, who had a lot of experience with chatbots. They were opinionated, and I appreciated the guidance.

It took a while to get the code to roughly where I was before, but I made two other changes I would not have known were possible from the way I used Copilot.

After spending time on the official website and seeing the extent to which it could be modified, I also came away thinking that Chatterbot is not the framework I would use for a serious project, despite its high ranking. Among other things, after ten years of releases, it is written and maintained mostly by one person and has seen few contributions for the past several years. Despite being well-designed, it feels more like a learning tool than something you could put into production at work. It’s probably second in the AI rankings due to uptake by beginners and the number of appearances on GitHub, not its quality or extensibility. That got me thinking about the limitations around why AI chooses things.

You might say that choosing a primary framework is not a big problem with Generative AI. But what if you are working on a JavaScript project for a couple of weeks, and discover your package.json file now has 50 packages in it, and a few look unfamiliar? Several months ago in a different side project, I noticed AI had apparently brought in a dependent node package called ‘g’ that is ‘not ready for production.’ I had never heard of it, and wondered how it got there.
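One way to catch this kind of surprise earlier is to compare what a package.json declares against the packages you remember choosing. The following is only a minimal sketch of that idea, not a real auditing tool. The function name `unfamiliar_packages`, the example manifest, its version numbers, and the allowlist are all hypothetical and invented for illustration.

```python
import json


def unfamiliar_packages(package_json_text, known):
    """Return declared package names that are not in the `known` collection."""
    manifest = json.loads(package_json_text)
    declared = set()
    # Check both sections, since an assistant may add packages to either one.
    for section in ("dependencies", "devDependencies"):
        declared.update(manifest.get(section, {}))
    return sorted(declared - set(known))


# Hypothetical manifest and allowlist, for illustration only.
example_manifest = """
{
  "name": "side-project",
  "dependencies": {"express": "^4.18.0", "g": "^2.0.1"},
  "devDependencies": {"jest": "^29.0.0"}
}
"""
known_packages = {"express", "jest"}

if __name__ == "__main__":
    # Lists every declared package that is missing from the allowlist.
    print(unfamiliar_packages(example_manifest, known_packages))
```

A check like this only flags names you did not expect. It cannot tell you whether a flagged package is safe, or why it was added, so you still have to look each one up yourself.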

Conclusions

After my experiment, I read some other materials about issues in using AI for learning, as well as reviewing relevant parts of cognitive science that I was familiar with.

There are many ways to learn how to do something. Professional teachers know many best practices. For instance, students learn better when they are asked to review what they have been taught, and also if they are quizzed later. Tutorials and video classes are great at this. AI has no interest in checking on your progress; it only answers questions.

Yet, the ability to generate customized, working code so quickly is a tremendous teaching advantage… isn’t it?

I am not so sure about that.

Instant code generation poses problems. I am used to learning something, trying code, and then, when it works, feeling great. But when I ask AI for something, I give the output a quick scan, and then jump directly to that wonderful feeling that it works. However, did I really learn how to do it? Having achieved that familiar euphoria of having working code, did I miss the absorption of knowledge from getting it to work myself?

Cognitive science tells us that combining multiple learning methods, like typing an example after reading about a concept, reinforces the information in your brain much more effectively than just reading. I have taken online classes where I merely read the code being discussed, and others where I typed in the code for each lesson. I remembered much more by typing out examples and quiz answers. I also have many memories of how I got good at some framework through hours of “noodling around” for answers. Yet, I probably wasted a lot of time on dead ends and looking for bugs in my code that AI would not have created. So perhaps not all hand coding is worthwhile.

Another problem I have with using AI for something new is that you have to know what to ask for. Yet when you are a beginner, you might not know. It’s quite easy to just go with what it says and not ask further questions about the code, or what other choices existed. With Chatterbot, I didn’t really understand all of the ways it could be enhanced until I read all the documentation.

This isn’t merely a matter of getting proper training, but your incentives on a project. If you just rush through generating lots of code, there’s no one to remind you that you did not consider the implications of what you are using. If your code works, it is quite easy to just keep going, especially if you have deadlines. And yes, this can also happen by pasting in answers from Stack Overflow.

Another issue is trust. If you do not trust AI, will you learn less than if you were taught the same information by a human instructor? This is a big issue, as many do not trust AI.

A recent popular book, The Expectation Effect by David Robson, makes the case that trust matters a lot for efficiency. It cites medical studies showing that if you do not trust your doctor, your health outcomes will be worse due to that alone, regardless of the quality of the medical treatment. Like the unexpected effectiveness of placebos for some people, your brain is powerful and can direct outcomes one way or the other.

So would someone like me who is skeptical of AI learn less from using it than someone who was a true believer? I think the answer is probably yes! Perhaps this factor explains why people who are most excited about AI seem to get more benefits than we skeptics do. My brain may be resisting some of what AI is producing, but it is more accepting of knowledge from human instructors.

You might counter that trusting other things, such as the “accepted answers” of Stack Overflow, is not exactly a foolproof plan! It is certainly not. But I still know there are human beings behind those answers and surely, they… tried out this code before typing it in as an answer… right?

Realizing only now that perhaps I have been trusting the humans of Stack Overflow too much and AI too little, I took some time to ponder that. As I had just created a chatbot with another chatbot, I remembered that one of the original ones from my childhood was meant to be a therapist. For fun, I visited an instance of Eliza online and asked for relationship counseling about her descendants.

Sigh. Even though I have known her for decades, she wasn’t very helpful.

I then asked Copilot the same question. It gave a long, thoughtful answer. I especially liked point number seven:

Balance AI with Human Judgment

Remember that AI is a tool to assist and augment human capabilities, not replace them. Use AI to enhance your decision-making process, but rely on your judgment and expertise for final decisions.

That felt relevant. We still need human instructors to tell us what we did not know we needed, to use proven teaching techniques, to review our progress, and to care how much we are learning. So I will not give up my tutorials and online classes.

I will still use AI for writing code to learn, and I will ask specific technical questions. But I will not let it do all the coding for a new technology, even if it could, because coding some pieces myself will help me retain more of the new knowledge. Regardless, I will sometimes ask AI to look at my hand-typed code, to let it find bugs and problems faster.

I really did like the answer Copilot gave me on trust. Perhaps I will be less skeptical, and learn more through using it. But I am unsure if that will end up as my accepted answer.